For the last 6 months I've been working away on my graphics engine - I initially wrote about it back in April. I've put about 20 days into writing it so far (give or take). I think I'm about ready to show where I've gotten to.

Well, I'll admit at first glance it's not very impressive, but it's a big step forward, for me at any rate. I've learned a good number of things that might be handy for folks who want to learn more about modern computer graphics.

First things first, what am I trying to do? Way back when, I wrote my first 3D graphics engine, pxljs, and used it in a couple of projects some time ago. It was written in CoffeeScript (a language, popular at the time, that compiles to JavaScript) and WebGL. I was quite pleased with it, but I always wanted to do something more like openFrameworks or Cinder. If you play any computer games, you'll probably have heard of Unity, Unreal Engine 5 or Godot. All of these are game engines.

Now, I'm not crazy enough to think I'll be making the next Unity engine, that's for sure! But there's quite the community of engine makers out there; you can find many of them on the Graphics Programming Discord. There are several little engine projects out there, and I reckon I could join them, with a bit of hard work.

The goal is to create a game I can put up on itch.io, a demo I can submit to a compo and a set of useful utilities I can use on the way there. And to have a fair bit of fun learning how modern computer graphics work.

I decided to use two main tools: Vulkan and Rust. Vulkan is the latest computer graphics standard from the Khronos Group (the folks who look after OpenGL). It's the de facto cross-platform graphics API. The main competitors are Metal (if you are on a Mac) and DirectX 12 (Microsoft platforms only). I wanted something cross-platform, so Vulkan it is.

Rather than use C++, a very common choice for high-performance graphics software (Java, C# and Python are not in the running), I decided to go with Rust. I'm keen to keep up my Rust skills as I quite like the language, and it's fast: on a par with C++. Most of all, though, I really like cargo, or rather, all the infrastructure around libraries, projects and testing. I find it much quicker and easier to get projects off the ground. I'm also confident it will be easier to port my engine from Linux to Windows when the time comes.

So why have I not gotten as far as I'd like? Well, Vulkan is notorious for being difficult to learn; it's much more complex than its predecessor OpenGL, or perhaps it's more accurate to say it is broader, attempting to cover as many bases as it can. Graphics hardware is quite diverse these days, with phones, tablets and consoles arguably more important than desktops. How do you write a library that works across many different vendors and architectures? Vulkan does a good job (I think) of providing a unified framework. Getting a triangle on screen is quite the undertaking, but there are a lot of good tutorials out there. Once you've gotten over the initial hump, it does become easier.

It's worth having a quick chat about tools I think. I've been going through a phase where I've been re-evaluating all the tools I use for programming. Vim is fine, but what about Helix or Micro? Should I move away from VSCode and try Kate? I've ditched Zsh for Fish. All that stuff.

When it came to this project, I really fancied an IDE. I don't know at what point I stopped using IDEs. To be honest, I never thought there was a really good one. VSCode is fine for Windows, but at the time I didn't like the idea of having a different IDE for each language I was using. Eclipse tried (and failed) to support many languages. Xcode was Mac only. I'd been away from IDEs for a while, so it was time for a revisit.

I've settled on RustRover. JetBrains make some of the best IDEs out there, or so folks seem to think. I have no complaints so far; it was absolutely the right choice for this project. It's saved me a lot of time (believe it or not) when tracking down bugs. There is a lot to be said for an integrated debugger.

One absolute must is RenderDoc, a graphics debugger. It lets you interrogate a frame to see why it looks the way it does. RenderDoc is, quite frankly, amazingly useful!

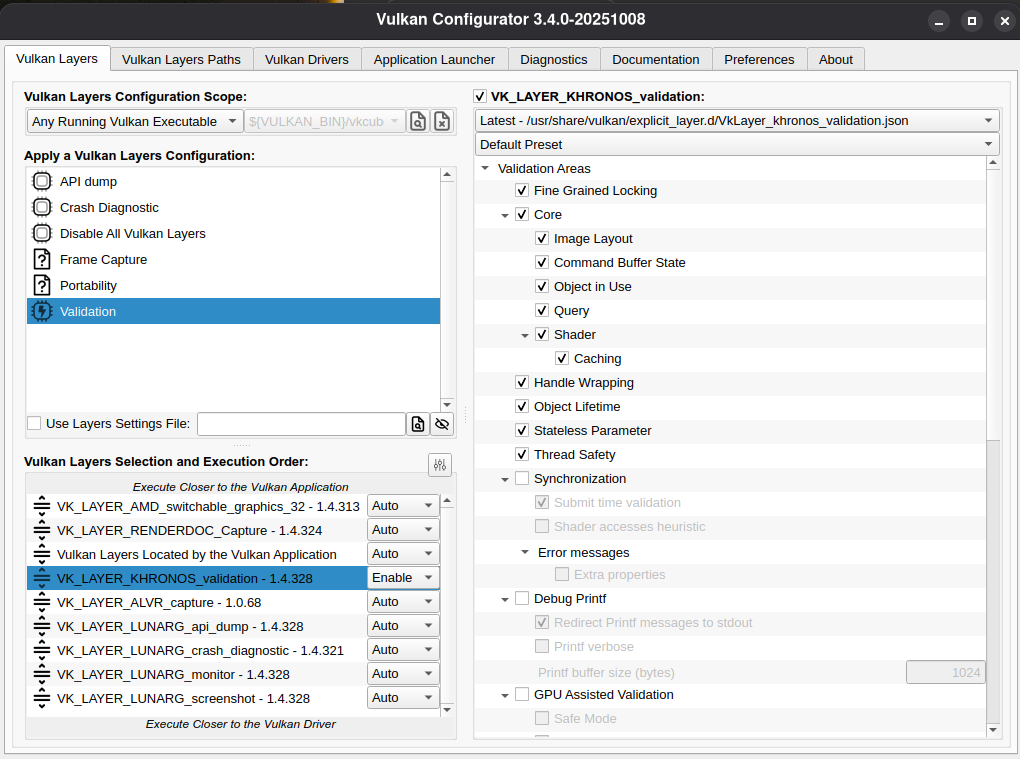

Another absolute must is the LunarG Vulkan SDK, if only for the Validation Layer. Related are the programs vulkaninfo and vkconfig.

For example, one can control which Vulkan layers are enabled, and their settings, with the Vulkan Configurator:

```shell
vkconfig gui
```

... which brings up a handy GUI for tweaking the various Vulkan settings.

How do you even begin with a graphics engine? Well, there are a number of books out there: Game Engine Architecture by Jason Gregory goes into a lot of detail. For now though, we can start with just one side of the engine - the graphics. Specifically, we'll be creating a Scene Graph.

If you've ever used Blender, you'll notice that all the objects in a scene (lights, meshes and so forth) are presented in a tree. This is essentially what a scene graph is: a way of specifying how all the elements in a scene fit together.

If we think about, say, a scene with a character standing on the ground outside, holding a laptop, we can imagine a hierarchy based on physical space. The ground is fixed, the character is positioned relative to the ground and the laptop is positioned relative to the character holding it. If we move the character, we expect the laptop to move too. Now, this seems more like a physics approach than a graphics one, but looking at things this way makes some of the maths a little more elegant. We can represent each object's location with a 4x4 matrix. By arranging them in a hierarchy, we can simply multiply each object's matrix by the matrices of its parents and everything ends up in the right place. We can also add things like textures and lights to our scene graph. For example, if two objects share the same texture, we can attach the texture to their parent and both objects will inherit it. The same goes for a shader or a light source.
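To make the "multiply by the parents' matrices" idea concrete, here's a minimal sketch in plain Rust with no maths crate. It assumes column-major 4x4 matrices (as GLSL expects) and a hypothetical flat list of nodes that each store their parent's index; `Mat4`, `Node`, `translation` and `world_matrix` are illustrative names only.

```rust
/// Column-major 4x4 matrix, indexed m[column][row], as GLSL expects.
type Mat4 = [[f32; 4]; 4];

fn identity() -> Mat4 {
    let mut m = [[0.0; 4]; 4];
    for (i, col) in m.iter_mut().enumerate() {
        col[i] = 1.0;
    }
    m
}

/// A pure translation: the fourth column holds (x, y, z, 1).
fn translation(x: f32, y: f32, z: f32) -> Mat4 {
    let mut m = identity();
    m[3] = [x, y, z, 1.0];
    m
}

/// Matrix product a * b for column-major storage.
fn mul(a: &Mat4, b: &Mat4) -> Mat4 {
    let mut r = [[0.0; 4]; 4];
    for col in 0..4 {
        for row in 0..4 {
            r[col][row] = (0..4).map(|k| a[k][row] * b[col][k]).sum();
        }
    }
    r
}

/// Hypothetical flat node: a local transform plus the index of its parent.
struct Node {
    local: Mat4,
    parent: Option<usize>,
}

/// World transform = parent's world transform * this node's local transform.
fn world_matrix(nodes: &[Node], index: usize) -> Mat4 {
    let node = &nodes[index];
    match node.parent {
        Some(p) => mul(&world_matrix(nodes, p), &node.local),
        None => node.local,
    }
}
```

With the ground at the origin, the character 5 units along x and the laptop 1 unit above the character, the laptop's world matrix ends up translating by (5, 1, 0). Walking up the parent chain for every node repeats work; a real traversal would instead pass each parent's world matrix down while visiting the tree from the root.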

A scene graph is made up of nodes. At the top is the root node. Beneath that are any number of child nodes, which can also have children (and so on). At minimum, each node has a transformation matrix: a 4x4 matrix that represents a spatial (well, affine) transformation such as a translation, scale, shear or rotation. A node can have optional geometry and optional shaders, as well as a camera (a perspective transform) or any number of lights. Nodes can be added to or removed from the hierarchy at any time.
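A minimal sketch of such a node in Rust, with placeholder types standing in for real geometry, shaders, cameras and lights (all hypothetical). Children are owned directly here for simplicity. The traversal shows the inheritance idea from earlier: each piece of geometry picks up the nearest shader found on its own node or on an ancestor.

```rust
// Hypothetical placeholders; a real engine would hold GPU resources here.
#[derive(Debug, Clone, PartialEq)]
struct Geometry { vertex_count: u32 }
#[derive(Debug, Clone, PartialEq)]
struct Shader { name: String }
#[derive(Debug, Clone, PartialEq)]
struct Camera { fov_y_radians: f32 }
#[derive(Debug, Clone, PartialEq)]
struct Light { intensity: f32 }

/// One scene-graph node: a transform is mandatory, everything else optional.
#[derive(Debug)]
struct Node {
    transform: [[f32; 4]; 4], // column-major 4x4 affine transform
    geometry: Option<Geometry>,
    shader: Option<Shader>,
    camera: Option<Camera>,
    lights: Vec<Light>,
    children: Vec<Node>,
}

impl Node {
    /// A new node with an identity transform and nothing attached.
    fn new() -> Self {
        let mut transform = [[0.0; 4]; 4];
        for (i, col) in transform.iter_mut().enumerate() {
            col[i] = 1.0;
        }
        Node { transform, geometry: None, shader: None, camera: None, lights: Vec::new(), children: Vec::new() }
    }

    /// Attach a child and return its index among this node's children.
    fn add_child(&mut self, child: Node) -> usize {
        self.children.push(child);
        self.children.len() - 1
    }

    /// Detach a child (and its whole subtree), handing it back to the caller.
    fn remove_child(&mut self, index: usize) -> Node {
        self.children.remove(index)
    }

    /// Number of nodes in this subtree, including this one.
    fn count(&self) -> usize {
        1 + self.children.iter().map(Node::count).sum::<usize>()
    }

    /// Depth-first walk pairing each piece of geometry with the nearest shader,
    /// either on the node itself or inherited from an ancestor.
    fn collect_draws<'a>(&'a self, inherited: Option<&'a Shader>, out: &mut Vec<(&'a Geometry, &'a Shader)>) {
        let shader = self.shader.as_ref().or(inherited);
        if let (Some(geometry), Some(shader)) = (self.geometry.as_ref(), shader) {
            out.push((geometry, shader));
        }
        for child in &self.children {
            child.collect_draws(shader, out);
        }
    }
}
```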

We can traverse this tree of nodes automatically and generate graphics chunks (a GChunk in my code) that can be sent to Vulkan for processing. Let's say our tree has two main branches, each with two meshes and its own shader. We can divide these into two groups, based on the shader. Let's say the first branch has two meshes with the same kind of vertex structure; we could group these together into the same graphics work chunk, or drawing pass. Now, if the second branch has one shader but two different sorts of vertex data, we need two separate drawing passes for it, giving a total of three chunks of graphics processing.
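The grouping described above is essentially a map keyed on (shader, vertex layout). Here's a minimal sketch with hypothetical `Mesh`, `VertexLayout` and `GChunk` types; a real GChunk would hold a pipeline and buffers rather than mesh names.

```rust
use std::collections::BTreeMap;

/// Hypothetical vertex formats; meshes sharing one can share a pipeline.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum VertexLayout {
    Position,
    PositionNormalUv,
}

#[derive(Debug)]
struct Mesh {
    name: &'static str,
    shader: &'static str,
    layout: VertexLayout,
}

/// One chunk of work: a single shader + vertex layout, and the meshes drawn with it.
#[derive(Debug)]
struct GChunk {
    shader: &'static str,
    layout: VertexLayout,
    meshes: Vec<&'static str>,
}

/// Group meshes so that each distinct (shader, layout) pair becomes one chunk.
fn build_chunks(meshes: &[Mesh]) -> Vec<GChunk> {
    let mut groups: BTreeMap<(&'static str, VertexLayout), Vec<&'static str>> = BTreeMap::new();
    for m in meshes {
        groups.entry((m.shader, m.layout)).or_default().push(m.name);
    }
    groups
        .into_iter()
        .map(|((shader, layout), meshes)| GChunk { shader, layout, meshes })
        .collect()
}
```

Feeding it the two-branch example (two "ground" meshes sharing a layout, plus two "clouds" meshes with different layouts) yields three chunks. A `BTreeMap` is used only so the chunk order is deterministic; a `HashMap` would group just as well.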

This is where we need to consider Vulkan and how it deals with drawing things to the screen. We need to work out the largest unit of graphics work we'll have to deal with: a geometric object with optional normals, faces, etc., drawn with its own shader, transformation matrix and optional texture(s) and lights. It's time we looked at Vulkan proper.

We should recap a little on shaders. They are one of the most important parts of a graphics engine; indeed, they are pretty much the core of a demoscene production. They are small(ish) programs that run on your GPU and customise the stage of the pipeline they are responsible for. So a vertex shader can mess around with the vertices sent to the GPU, whereas a pixel (or fragment) shader works out the colour of each pixel that comes its way, once the earlier stages (such as rasterisation) have done their work.

Shaders are written in a number of languages, the two main ones being HLSL and GLSL. As a veteran of OpenGL and WebGL, I'll be using GLSL exclusively. In OpenGL and WebGL, you hand the GLSL source to the driver at runtime and it just works. In Vulkan, however, you need to compile your shaders into the Standard Portable Intermediate Representation (SPIR-V) before Vulkan will use them. It's a tad annoying, but we can get around that easily enough.
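Once a shader has been compiled ahead of time (for example with `glslc shader.vert -o vert.spv`, which ships with the Vulkan SDK), the engine has to load the `.spv` file and hand Vulkan a slice of 32-bit words. Here's a std-only sketch of that step; `spirv_words` and `SpirvError` are hypothetical names, and crates such as ash provide a similar helper (`ash::util::read_spv`). It checks the length and the SPIR-V magic number, 0x07230203, byte-swapping if the module was written big-endian.

```rust
/// The SPIR-V magic number: the first 32-bit word of every module.
const SPIRV_MAGIC: u32 = 0x0723_0203;

#[derive(Debug, PartialEq)]
enum SpirvError {
    BadLength(usize),
    BadMagic(u32),
}

/// Convert the raw bytes of a `.spv` file into the u32 words that
/// VkShaderModuleCreateInfo expects, accepting either byte order.
fn spirv_words(bytes: &[u8]) -> Result<Vec<u32>, SpirvError> {
    // A module is a whole number of 32-bit words, and never empty.
    if bytes.is_empty() || bytes.len() % 4 != 0 {
        return Err(SpirvError::BadLength(bytes.len()));
    }
    let mut words: Vec<u32> = bytes
        .chunks_exact(4)
        .map(|c| u32::from_le_bytes([c[0], c[1], c[2], c[3]]))
        .collect();
    if words[0] == SPIRV_MAGIC.swap_bytes() {
        // Written big-endian: swap every word into host order.
        for w in &mut words {
            *w = w.swap_bytes();
        }
    }
    if words[0] != SPIRV_MAGIC {
        return Err(SpirvError::BadMagic(words[0]));
    }
    Ok(words)
}
```

In practice you'd feed it the result of `std::fs::read("vert.spv")` and pass the words on when creating the shader module. Keeping them in a `Vec<u32>` also guarantees the 4-byte alignment the `pCode` pointer needs.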

Shaders will form the basis of our search for a GChunk. I definitely want to use more than one shader on screen at a time. There is an approach where one uses what is known as an uber-shader (which I have considered), but as any programmer will tell you, you inevitably want more than one of anything, and shaders are no exception ;) For example, I might want one shader to render volumetric clouds, another to take care of my ground scene, and a third to handle 2D UI elements like text. This is where we start in building our engine. Let's find out how we can get any number of triangles on screen dynamically, each with their own shader.

A contradiction in terms, Vulkan Basics perhaps? Well, I certainly can't go into Vulkan in-depth here. For starters I still don't know it well enough. However, there are plenty of excellent tutorials and books on Vulkan. I'll list the ones I've used here:

There is one problem with most of these resources, however: they don't describe how to build a scene. If you want a single triangle on screen, or a model with textures, you'll find plenty. What you won't find as easily are examples of multiple shaders and geometry representations together in one application. Now, this might be because once you've done a single triangle, the rest is obvious and I'm just dumb. It's possible; I've had a lot on of late, so I haven't been as focused as maybe I should have been. Nevertheless, some of you might be struggling in the same way, so I'm going to approach this section with multiple draw calls in mind.

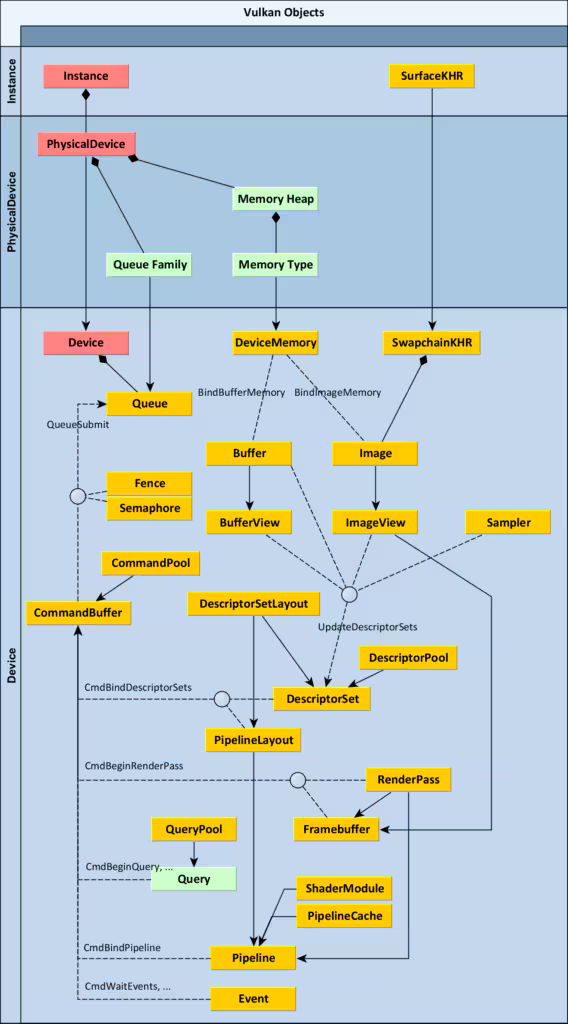

I find the following two diagrams really helpful for understanding Vulkan.

This first diagram is really quite handy. We can see the various elements that make up Vulkan and the relationships between them. At the top of the diagram (really, it should be the bottom) are the instance and the physical devices. For the most part, I haven't needed to worry about these too much. The SurfaceKHR is a little more complicated, in that we need to pick some sort of windowing surface provided by our operating system. This part I do need to worry about, as I'd like to support Windows, Linux and macOS.
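Which surface you can create depends on the OS, and each needs its own instance extension alongside the generic `VK_KHR_surface`. Here's a hard-coded sketch using `cfg!` and extension names from the Vulkan registry; the function name `surface_extensions` is hypothetical, and in practice a windowing crate can usually report the right list for you.

```rust
/// Instance extensions a window surface typically needs on each desktop OS.
/// Before enabling them, check each one against what
/// vkEnumerateInstanceExtensionProperties actually reports.
fn surface_extensions() -> Vec<&'static str> {
    // Every platform needs the generic surface extension.
    let mut exts = vec!["VK_KHR_surface"];
    if cfg!(target_os = "windows") {
        exts.push("VK_KHR_win32_surface");
    } else if cfg!(target_os = "macos") {
        // Vulkan on macOS goes through MoltenVK, a portability implementation,
        // so the instance must also opt in to portability enumeration
        // (together with the VK_INSTANCE_CREATE_ENUMERATE_PORTABILITY_BIT_KHR flag).
        exts.push("VK_EXT_metal_surface");
        exts.push("VK_KHR_portability_enumeration");
    } else if cfg!(target_os = "linux") {
        // X11 and Wayland each have their own extension; both are listed here,
        // and the caller should drop any the loader doesn't offer.
        exts.push("VK_KHR_xlib_surface");
        exts.push("VK_KHR_wayland_surface");
    }
    exts
}
```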

The boxes in green give away a large part of how Vulkan works. It's all about memory types, buffers and queues. We have memory for images, commands, geometry and such. We need to set up these different memory types on our GPU and CPU devices. Queues do the work, often in parallel. Each queue has certain capabilities; queues with the same capabilities are grouped together into families.
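Picking a queue family boils down to checking capability bits. This std-only sketch uses a hypothetical `QueueFamily` struct in place of `VkQueueFamilyProperties`, with flag values copied from `VkQueueFlagBits`.

```rust
// Bit values match VkQueueFlagBits in the Vulkan specification.
const QUEUE_GRAPHICS: u32 = 0x1;
const QUEUE_COMPUTE: u32 = 0x2;
const QUEUE_TRANSFER: u32 = 0x4;

/// Simplified, hypothetical stand-in for VkQueueFamilyProperties.
struct QueueFamily {
    flags: u32,
    queue_count: u32,
}

/// Index of the first family with at least one queue and every capability in `wanted`.
fn find_family(families: &[QueueFamily], wanted: u32) -> Option<usize> {
    families
        .iter()
        .position(|f| f.queue_count > 0 && (f.flags & wanted) == wanted)
}

/// Prefer a dedicated transfer-only family (handy for uploads running alongside
/// rendering); otherwise fall back to a graphics family, which the spec says
/// can always perform transfer work even if it doesn't report the bit.
fn find_transfer_family(families: &[QueueFamily]) -> Option<usize> {
    families
        .iter()
        .position(|f| {
            f.queue_count > 0
                && (f.flags & QUEUE_TRANSFER) != 0
                && (f.flags & (QUEUE_GRAPHICS | QUEUE_COMPUTE)) == 0
        })
        .or_else(|| find_family(families, QUEUE_GRAPHICS))
}
```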

Further down we begin to get to the odd stuff. Descriptor Sets, as I understand them, describe the resources our shaders will read, like a uniform buffer or the texture we want (the vertex organisation itself is described separately, in the pipeline's vertex input state). These are combined with a Pipeline (which has a particular Layout). It is here that Shaders are added, right at the bottom of the diagram. These are combined with commands in a Command Buffer, which is sent to a queue to be processed. It's a lot to take in, but from a more aerial view we are describing resources in memory, then passing them to a queue along with the commands and pipeline we need in order to render them to the screen.

Over to the right we have something called the Swapchain. This is a special thing that deals with double buffering (sort of, along with the framebuffer and RenderPass) and the memory allocated to the actual screen by the OS. For now, I'm going to ignore it and assume it's just doing its thing.

The key thing to note is that Vulkan is very parallel and somewhat asynchronous. Commands are submitted from the CPU to the GPU in one order, but a command may have started and not finished by the time the next one is sent. This is because the CPU is, of course, separate from the GPU. We need to be careful with our synchronisation; this is perhaps the biggest difference from OpenGL. If we bear in mind that we are submitting commands to multiple queues, the GPU is most likely processing multiple things at once, and we'll occasionally need to wait until one piece of work reports it has finished before starting the next.
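Vulkan's fences are how the CPU waits for the GPU. The sketch below is only an analogy built from Rust's standard library: a `Mutex<bool>` plus a `Condvar` stands in for a `VkFence`, and a worker thread stands in for a GPU queue. None of it is Vulkan API, and `Fence` and `run_frames` are hypothetical names. It shows the usual pattern behind `vkWaitForFences` and `vkResetFences`: wait on the fence, reset it, then submit work that will signal it once finished.

```rust
use std::sync::{mpsc, Arc, Condvar, Mutex};
use std::thread;

/// CPU-side stand-in for a VkFence: signalled by the "GPU", waited on by the CPU.
#[derive(Default)]
struct Fence {
    signalled: Mutex<bool>,
    cv: Condvar,
}

impl Fence {
    fn signal(&self) {
        *self.signalled.lock().unwrap() = true;
        self.cv.notify_all();
    }
    fn wait(&self) {
        let mut s = self.signalled.lock().unwrap();
        while !*s {
            s = self.cv.wait(s).unwrap();
        }
    }
    fn reset(&self) {
        *self.signalled.lock().unwrap() = false;
    }
}

/// Submit `frames` pieces of work to a pretend queue, waiting on the fence before
/// each submission (as you would before re-recording a command buffer).
/// Returns the order in which the pretend GPU completed the work.
fn run_frames(frames: u32) -> Vec<u32> {
    let (tx, rx) = mpsc::channel::<(u32, Arc<Fence>)>();
    let done = Arc::new(Mutex::new(Vec::new()));
    let done_gpu = Arc::clone(&done);
    let gpu = thread::spawn(move || {
        for (frame, fence) in rx {
            done_gpu.lock().unwrap().push(frame); // "execute" the commands
            fence.signal();
        }
    });

    let fence = Arc::new(Fence::default());
    fence.signal(); // start signalled, like VK_FENCE_CREATE_SIGNALED_BIT
    for frame in 0..frames {
        fence.wait(); // don't touch this frame's resources until the GPU is done
        fence.reset();
        tx.send((frame, Arc::clone(&fence))).unwrap();
    }
    drop(tx); // closing the channel lets the worker thread finish
    gpu.join().unwrap();
    let completed = done.lock().unwrap().clone();
    completed
}
```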

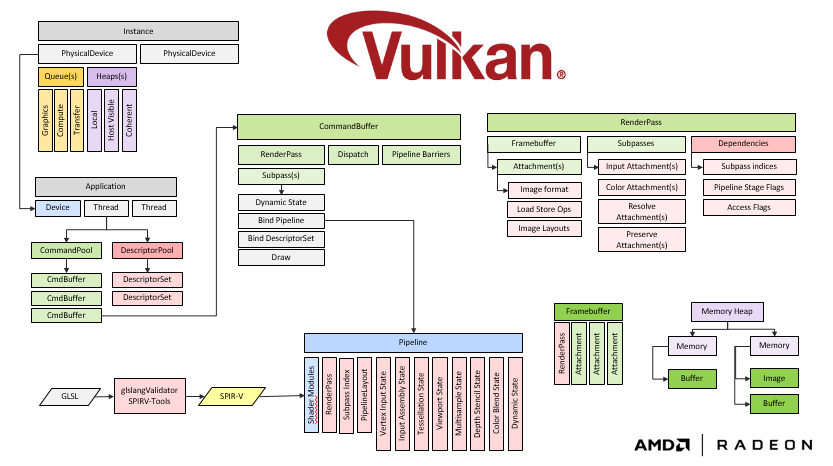

This second diagram shows similar information to the first, but breaks it up a little differently. At the top left we have the Instance and Devices again. Most of that we can take care of with some boilerplate. The application section on the middle left of the diagram is more interesting. The Descriptor Sets make an appearance again. We'll need quite a few of these for the various combinations of resources we might want to use.

In the centre of the diagram we have two important sections: the Command Buffer and the Render Pass. Looking at the Command Buffer, we can see that it contains a render pass in which a Pipeline is bound. A render pass may have a number of subpasses; subpasses will come in handy later on when we come to Deferred Rendering. The Pipeline at the bottom of the diagram is where our shaders come in. So in our scene graph approach, it looks like each graphics chunk will have its own pipeline.

The RenderPass on the right of the image is less important to us at this stage, but will become very important when we perform multiple passes over the same geometry, combining them to form the final image in a Deferred Renderer.

We can define our GChunk, then, as being a pipeline with its own shader, pointers to buffers and memory, and a set of drawing commands. Each GChunk records its commands into a single, shared command buffer. This command buffer is then submitted to a queue; the queues are held in a Vulkan Context. This master context looks after the devices, pools, RenderPasses and Framebuffers.
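A std-only sketch of that recording step. The handles and the `Command` enum are hypothetical stand-ins for `vkCmdBeginRenderPass`, `vkCmdBindPipeline`, `vkCmdBindVertexBuffers`, `vkCmdDraw` and `vkCmdEndRenderPass`; a real version would make those calls on a `VkCommandBuffer` instead of pushing onto a `Vec`.

```rust
/// Hypothetical opaque handles; in a real engine these would be Vulkan objects.
#[derive(Debug, Clone, Copy, PartialEq)]
struct PipelineHandle(u32);
#[derive(Debug, Clone, Copy, PartialEq)]
struct BufferHandle(u32);

/// Pretend commands mirroring the vkCmd* calls a frame would record.
#[derive(Debug, PartialEq)]
enum Command {
    BeginRenderPass,
    BindPipeline(PipelineHandle),
    BindVertexBuffer(BufferHandle),
    Draw { vertex_count: u32 },
    EndRenderPass,
}

/// One GChunk: its own pipeline (and so shader) plus the buffers it draws.
struct GChunk {
    pipeline: PipelineHandle,
    draws: Vec<(BufferHandle, u32)>, // (vertex buffer, vertex count)
}

impl GChunk {
    /// Append this chunk's commands to the shared command buffer.
    fn record(&self, cmd: &mut Vec<Command>) {
        cmd.push(Command::BindPipeline(self.pipeline));
        for &(buffer, vertex_count) in &self.draws {
            cmd.push(Command::BindVertexBuffer(buffer));
            cmd.push(Command::Draw { vertex_count });
        }
    }
}

/// Record every chunk, in order, into one command buffer for the frame.
fn record_frame(chunks: &[GChunk]) -> Vec<Command> {
    let mut cmd = vec![Command::BeginRenderPass];
    for chunk in chunks {
        chunk.record(&mut cmd);
    }
    cmd.push(Command::EndRenderPass);
    cmd
}
```

Binding the pipeline once per chunk, rather than once per mesh, is the payoff of grouping meshes by shader: pipeline switches are among the more expensive state changes.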

One particular buffer is worth mentioning here: the Uniform Buffer Object (UBO). This is how we get our data into our shaders. Each GChunk will have a vertex and a fragment shader attached to it. These shaders will map to a UBO and read data from it. This is how we'll get data from our tree-node scene graph into the shader.
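Uniform blocks in Vulkan GLSL use the std140 layout by default, where a `mat4` takes 64 bytes with 16-byte alignment, so the Rust side has to match byte for byte. A minimal sketch, assuming a hypothetical uniform block holding model, view and projection matrices (`TransformUbo` and `to_bytes` are illustrative names):

```rust
/// Rust mirror of a hypothetical GLSL uniform block:
///
///   layout(set = 0, binding = 0) uniform Transforms {
///       mat4 model; mat4 view; mat4 proj;
///   };
///
/// Each mat4 is 64 bytes with 16-byte alignment under std140,
/// so three of them pack with no padding: 192 bytes in total.
#[repr(C, align(16))]
#[derive(Clone, Copy, Debug, PartialEq)]
struct TransformUbo {
    model: [[f32; 4]; 4], // column-major, matching GLSL's default
    view: [[f32; 4]; 4],
    proj: [[f32; 4]; 4],
}

impl TransformUbo {
    /// Flatten to native-endian bytes, ready to copy into a mapped uniform buffer.
    fn to_bytes(&self) -> Vec<u8> {
        [self.model, self.view, self.proj]
            .iter()
            .flat_map(|m| m.iter().flatten())
            .flat_map(|f| f.to_ne_bytes())
            .collect()
    }
}
```

The easy trap is `vec3`, which std140 aligns to 16 bytes; padding it out to a `vec4` (or adding an explicit padding float on the Rust side) avoids mismatches.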

Firstly, let's dispense with the memes around Rust. It's gotten a lot of hype from advocates (some say zealots) and therefore a lot of push back. I've been working with it on and off for a while now; I find that it's a pretty good tool for high-performance, lower-level work such as computer graphics. Rust's main selling point is its memory safety. According to OWASP and CWE, some of the biggest security problems are due to memory bugs such as buffer overruns and invalid memory reads and writes. Rust has started to enter the Linux kernel and may have some support from the US government and military, arguably because of its focus on more secure code through fewer memory bugs. It's certainly not a magic bullet, but it might help improve things in domains dominated by the C and C++ languages. But who knows?

Indeed, in the case of Vulkan, much of the code is marked as unsafe, which means you don't get any of the nice guarantees Rust tries to make. So what's the point of using it then over C/C++? Well, I can think of a few reasons, some personal, some applicable more broadly:

Ultimately, I don't see many reasons not to use Rust save for one - it isn't quite as mature as C or C++, despite being around for over a decade. This does give me pause for thought. However, in graphics land, much of the heavy lifting will be done by Vulkan once the basics are done. I think the benefits of learning a new language with some modern features and good cross-platform support outweigh the negatives.

I mentioned that Vulkan supports a lot of parallelism, which it expects you - the programmer - to deal with. All graphics pipelines are highly parallelised but Vulkan doesn't hide this. Indeed, one can be recording commands to multiple buffers, whilst rendering to the screen in separate tiles all at once! It can get quite complex.

Synchronisation in Vulkan is a big deal, and I still don't fully understand it. However, in a nutshell you have two main things to deal with:

My advice on this is have a read of the following links when you start thinking about Vulkan synchronisation:

On the Rust/CPU side, we definitely want at least two threads of control. The main thread is the first one to start and will control the window, the events and the Vulkan drawing commands. The second thread, the update thread, will take care of adding and deleting nodes, updating the UBOs and performing any other CPU side operations. This keeps the drawing nice and smooth, but does mean we need to watch out for race conditions and thread safety.
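Here's a minimal, std-only sketch of that two-thread split using `Arc<Mutex<T>>`. `Scene`, `run` and the fields are hypothetical placeholders; a real engine would hold scene-graph nodes and mapped Vulkan buffers, and its main thread would be running the window's event loop rather than a fixed number of frames.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical, heavily simplified scene shared between the two threads.
#[derive(Default)]
struct Scene {
    nodes: Vec<String>, // stand-in for real scene-graph nodes
    ubo_data: Vec<f32>, // stand-in for per-node uniform data
    frames_drawn: u64,
}

/// Spawn an update thread that adds nodes and writes uniform data, while the
/// calling (main) thread "draws" `frames` frames. Returns (node count, frames drawn).
fn run(frames: u64) -> (usize, u64) {
    let scene = Arc::new(Mutex::new(Scene::default()));

    let update_scene = Arc::clone(&scene);
    let updater = thread::spawn(move || {
        for i in 0..5 {
            // The lock is taken briefly and released at the end of each iteration.
            let mut s = update_scene.lock().unwrap();
            s.nodes.push(format!("node{i}"));
            s.ubo_data.push(i as f32);
        }
    });

    for _ in 0..frames {
        // Hold the lock only long enough to copy out what drawing needs...
        let _draw_data: Vec<f32> = {
            let mut s = scene.lock().unwrap();
            s.frames_drawn += 1;
            s.ubo_data.clone()
        };
        // ...then record and submit Vulkan commands from `_draw_data` without the lock.
    }

    updater.join().unwrap();
    let s = scene.lock().unwrap();
    (s.nodes.len(), s.frames_drawn)
}
```

Keeping the critical sections short is what stops the update thread from stalling the drawing; copying a snapshot out under the lock is the simplest way to do that.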

Rust has a number of built-in types for sharing memory safely. This write-up goes into detail about which ones to use. You can think of them a bit like smart pointers in C++, only they do a little more than just count references to some memory. Roughly speaking, the main ones are:

These two don't allow you to mutate (change, basically) whatever they are pointing to, so we need a few more options:

A Copy type is a type whose value can be duplicated with a simple bitwise copy without breaking Rust's memory sharing rules¹. My understanding is that anything that can be copied in one go is a Copy type; i.e. if a struct just has numbers and some fixed-length items, it's fine. But if it owns something else, such as a Vec, a String or a Box pointing at the heap, then it's not a Copy type.
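`Cell<T>` is where Copy types earn their keep: `Cell::get` copies the value out, so it only exists when `T: Copy`. A small sketch with hypothetical `Transform` and `Node` types, changing a node's transform through a shared `&` reference:

```rust
use std::cell::Cell;

/// Just numbers and a fixed-length array: this can derive Copy.
#[derive(Clone, Copy, Debug, PartialEq)]
struct Transform {
    position: [f32; 3],
    scale: f32,
}

// By contrast, a struct owning heap data cannot derive Copy; this won't compile:
// #[derive(Clone, Copy)] struct Mesh { vertices: Vec<f32> }

/// Hypothetical node whose transform can change through a shared reference.
struct Node {
    transform: Cell<Transform>,
}

/// Move a node along x without needing `&mut Node`.
fn nudge(node: &Node, dx: f32) {
    let mut t = node.transform.get(); // copies the Transform out (needs T: Copy)
    t.position[0] += dx;
    node.transform.set(t); // writes the modified copy back
}
```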

So RefCell<T> should be enough, right? Well, not quite. We'll definitely be needing some threads in our program, so we need some extra support:

Throughout my code, I've tended to find I use Arc<Mutex<T>> a lot when sharing items between the drawing thread and the update thread. This does have a performance cost but if that becomes a problem I can always use some of the other types that are available.

One useful debugging feature in Vulkan is layers. As the name suggests, layers sit on top of the standard Vulkan library in order to add or alter some functionality. The most useful layer I've found is the Validation Layer. When activated, this layer prints out a number of additional warnings and errors relating to your use of Vulkan. It's helped me with many things including:

There are a number of other layers available that one can use. The LunarG Crash Diagnostic Layer is quite useful; build it from source, place it in the correct directory and you'll get some useful output if (when?) your Vulkan instance bails out.
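Layers are switched on by name when the Vulkan instance is created, and asking for one that isn't installed makes instance creation fail with `VK_ERROR_LAYER_NOT_PRESENT`. Here's a std-only sketch of the usual check: compare the layers you want against the names reported by `vkEnumerateInstanceLayerProperties` (passed in here as plain strings), and only request validation in debug builds. `missing_layers` and `layers_to_enable` are hypothetical helper names.

```rust
/// The Khronos validation layer, as shipped with the Vulkan SDK.
const VALIDATION_LAYER: &str = "VK_LAYER_KHRONOS_validation";

/// Requested layers that do not appear in `available`.
fn missing_layers<'a>(requested: &[&'a str], available: &[&str]) -> Vec<&'a str> {
    requested
        .iter()
        .copied()
        .filter(|r| !available.iter().any(|a| a == r))
        .collect()
}

/// Ask for validation only in debug builds, and only if it is installed.
fn layers_to_enable(available: &[&str]) -> Vec<&'static str> {
    let wanted: &[&'static str] = if cfg!(debug_assertions) { &[VALIDATION_LAYER] } else { &[] };
    if missing_layers(wanted, available).is_empty() {
        wanted.to_vec()
    } else {
        eprintln!("warning: {VALIDATION_LAYER} not found; is the Vulkan SDK installed?");
        Vec::new()
    }
}
```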

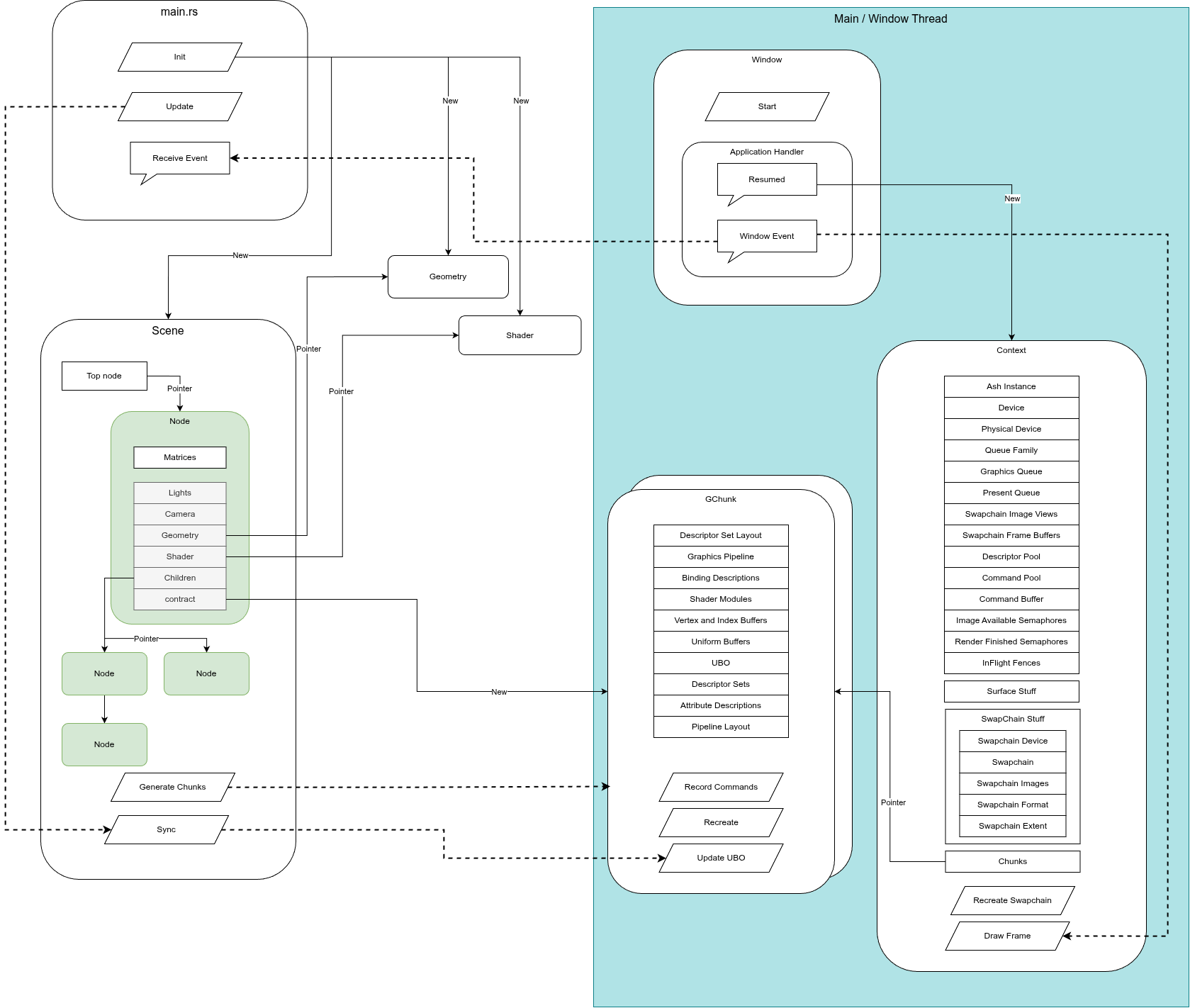

Here is a big image! You might need to click on it to be able to read it!

There's not that much to it really ;^_^ Honest! The main points are:

That's pretty much it. A fairly simple architecture and it seems to work. I've yet to sort out deleting nodes, or grouping them together in a single call. That will definitely come in the near future. For now though, we have the basics of drawing multiple things to the screen.

Well, it's been a slog and no mistake. I'm glad I stopped to write this blog post as I needed a break! I'd read that it takes a while to get anything on screen, and longer still to get your understanding up to the level where you can do fun stuff. I'm almost there, I think.

Last month I went to Play Expo and got chatting with Jeff Minter. He's been working on a big engine himself, so I asked him how he keeps going with it. He told me that the main thing was that at each stage of development, he could put out a product or useful program. That way, not only could he make a bit of money or have something useful, but it would help direct the course of development. This seems like a great idea to me (and who am I to argue?). So then, I think the next steps are:

Yep, a useful tool would be a shader editor, a bit like Bonzomatic. There are many of them, but I think having my own could be quite useful for things like demoscene productions, testing new effects and such. I can certainly do that now with the code I've already written.

It's taken quite a lot of time to get to this point. My goal of a game on itch.io seems a long way off, but it will happen!